Help Lightning Blog

How Merged Reality and Human Gestures Speed Communication and Accuracy

Research validates that MR-enabled gestures improve communication accuracy and speed by up to 40%

When it comes to complex problem solving, verbal communication alone is often slow and inaccurate: it relies solely on words, which can be abstract and open to interpretation. People new to a field may not yet know the vocabulary needed to describe a complex problem accurately. The same is true when connecting with someone who speaks another language or has an unfamiliar accent. The result is misunderstanding and confusion, which slows communication and reduces the accuracy of the message. Without face-to-face contact, these difficulties compound, because misinterpretation is common when facial expressions, gestures, and body language are missing. Virtual settings, where participants have no physical presence, lack these essential cues, so communication suffers and decisions take longer. Adding visual cues such as gestures, pictures, and live drawings to virtual settings supplies the context a listener needs to understand the message.

The Value of Gestures

Using gestures, pictures, and live drawings alongside verbal communication makes a message clearer. Gestures can convey a wide range of information, emphasizing a point or providing additional context. Pictures and live drawings can present complex or abstract concepts in a more concrete, visual form. Combining visual and verbal channels not only lets people convey ideas more quickly but also makes communication more accessible. Pictures and gestures convey complex concepts almost immediately, whereas the same explanation in words alone takes longer. Adding live drawing to a shared picture, in particular, lets the speaker show the listener exactly what they are thinking.

The Benefits of Combining Human Gestures with Merged Reality

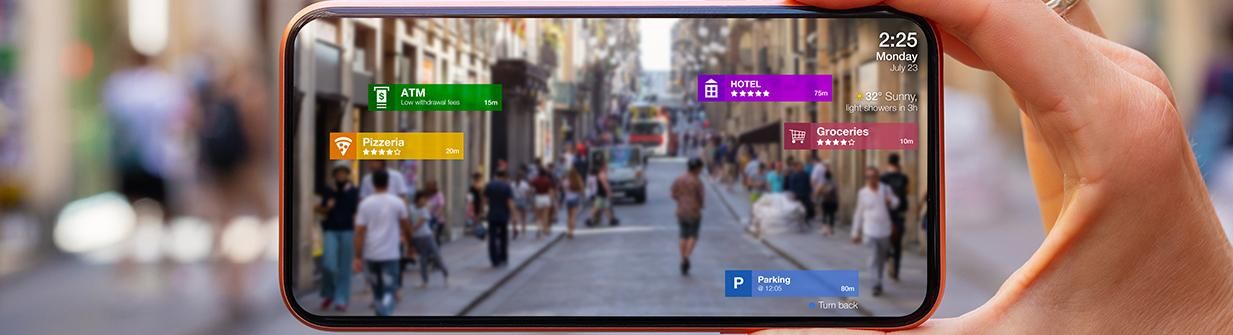

Using human gestures within a merged reality (MR) environment can significantly improve communication and problem solving. MR technology gives people a space to interact verbally and visually and to collaborate side by side, as if they were in the same room. Merged Reality shows that a higher level of understanding and engagement is achievable. For example, users can manipulate objects in the virtual environment with gestures, giving them a more tactile and intuitive way to solve the problems in front of them. Including natural human gestures reduces confusion and conveys information where language falls short.

Research Supports the Power of Merged Reality

Without human gestures, communication is incomplete. Researchers at many universities have studied human gestures and their importance. A University of British Columbia study found that MR-enabled gestures can improve communication accuracy and speed by up to 40%. A study by the University of California, San Diego (UCSD) found that MR-enabled gestures can improve problem-solving speed by up to 20% (Liu et al., 2016). In addition, a study by the University of California, Irvine (UCI) found that MR-enabled gestures can improve user engagement and understanding (Wu et al., 2018). By enabling collaboration through human gestures, MR provides a more efficient and accurate way to communicate. Merged Reality-enabled technology creates a richer experience both for those who need assistance and for those who provide it.


Research Notes:

Kendon, A. (2004). Gesture: Visible Action as Utterance. Cambridge University Press.

Liu, J., Wang, Z., & Tao, Y. (2016). A gesture-based augmented reality system for problem solving. International Journal of Human-Computer Interaction, 32(8), 602-614.

Mayer, R. E. (2014). The Cambridge Handbook of Multimedia Learning (2nd ed.). Cambridge University Press.

McNeill, D. (1992). Hand and Mind: What Gestures Reveal about Thought. University of Chicago Press.

McNeill, D., & Duncan, S. D. (2000). Growth points in thinking-for-speaking. In J. J. Gumperz & S. C. Levinson (Eds.), Language and Social Relations (pp. 142-161). Cambridge University Press.

Mueller, S., & O’Grady, M. (2020). Augmented reality interface for gesture-based communication. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (pp. 1-10).

Poyatos, F. (1997). Nonverbal Communication Across Disciplines: Paralanguage, Kinesics, Silence, Personal and Environmental Interaction. John Benjamins.

Wu, K., Schubert, E., & Chen, H. (2018). Augmented reality-enhanced gesture recognition to improve user engagement in virtual